10 Product Discovery Techniques That Actually Work

TL;DR: Product Discovery Techniques in 30 Seconds

- 10 techniques organized by situation: "don't know the problem," "have assumptions," "need to validate," "stakeholders disagree"

- Decision matrix helps you pick the right technique based on team size, time, and what you need to learn

- 3 anti-patterns that waste discovery time: interview marathons, premature A/B tests, survey-only traps

- Technique combinations counter confirmation bias better than any single method

- Key insight: Pick the technique that matches your current uncertainty, not the one you're most comfortable with

Product discovery techniques are the specific methods product teams use to understand customer problems, test assumptions, and validate solutions before committing engineering resources. Most teams know they should do discovery. The problem is choosing the right technique for their situation.

According to Deloitte research, customer-centric companies are 60% more profitable than those that are not. Yet in my coaching experience, most teams default to customer interviews for everything. That is like using a hammer for every problem. It works sometimes, but you miss what a screwdriver, wrench, or saw could reveal.

"The first thing that comes to your mind is usually not the best thing."

— Product Bakery Podcast

This guide organizes 10 discovery techniques by the situation you face. Not by phase. Not alphabetically. Because the question is never "which technique exists?" The question is "which technique fits my problem right now?"

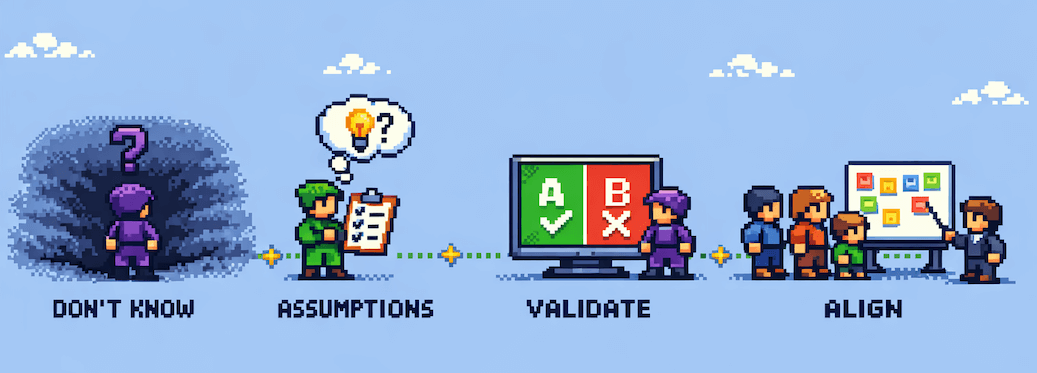

How to Choose the Right Discovery Technique

Before picking a technique, ask yourself: what type of uncertainty am I facing? The technique should match the uncertainty, not your team's comfort zone.

| Situation | Best Techniques | Team Size | Time Needed | What You Learn |
|---|---|---|---|---|
| Don't know the problem | Interviews, Observation, JTBD | 2-3 people | 1-2 weeks | Real problems, workarounds, unmet needs |
| Have assumptions to test | Prototyping, Surveys, Competitive Analysis | 2-4 people | 1-3 weeks | Validated or invalidated assumptions |
| Need to validate solutions | A/B Testing, Analytics, Story Mapping | 3-5 people | 2-4 weeks | Behavioral evidence, usage patterns |
| Stakeholders disagree | Assumption Mapping | 5-10 people | 90 minutes | Shared priorities, aligned next steps |
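If your team keeps this matrix in a planning script or internal tool, it can be written down as a simple lookup. The following is a minimal Python sketch, not a prescribed tool: the names `Recommendation`, `DISCOVERY_MATRIX`, and `suggest_techniques` are hypothetical, and the values are copied straight from the table.

```python
from typing import NamedTuple


class Recommendation(NamedTuple):
    techniques: tuple[str, ...]
    team_size: str
    time_needed: str
    what_you_learn: str


# Values copied from the decision matrix; the data structure is illustrative.
DISCOVERY_MATRIX: dict[str, Recommendation] = {
    "don't know the problem": Recommendation(
        ("Interviews", "Observation", "JTBD"),
        "2-3 people", "1-2 weeks",
        "Real problems, workarounds, unmet needs",
    ),
    "have assumptions to test": Recommendation(
        ("Prototyping", "Surveys", "Competitive Analysis"),
        "2-4 people", "1-3 weeks",
        "Validated or invalidated assumptions",
    ),
    "need to validate solutions": Recommendation(
        ("A/B Testing", "Analytics", "Story Mapping"),
        "3-5 people", "2-4 weeks",
        "Behavioral evidence, usage patterns",
    ),
    "stakeholders disagree": Recommendation(
        ("Assumption Mapping",),
        "5-10 people", "90 minutes",
        "Shared priorities, aligned next steps",
    ),
}


def suggest_techniques(situation: str) -> Recommendation:
    """Return the matrix row for a situation (case-insensitive match)."""
    key = situation.strip().lower()
    if key not in DISCOVERY_MATRIX:
        known = ", ".join(sorted(DISCOVERY_MATRIX))
        raise KeyError(f"Unknown situation {situation!r}; expected one of: {known}")
    return DISCOVERY_MATRIX[key]
```

Calling `suggest_techniques("Stakeholders disagree")` returns the assumption mapping row. The lookup starts from the situation on purpose: you name the uncertainty first, and the technique follows from it.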

The pattern I see is that teams pick the technique they are comfortable with rather than the one matching their current uncertainty. A team full of researchers defaults to interviews. A data-heavy team defaults to analytics. Neither is wrong in isolation, but both miss what the other reveals. The better habit is to choose a research method based on what you need to learn, not what you already know how to do.

10 Product Discovery Techniques That Work

When You Don't Know the Problem

These techniques help when you are exploring a new space, entering a new market, or hearing conflicting signals about what users need.

1. Customer Interviews

One-on-one conversations with users or potential users, focused on understanding their problems, behaviors, and context. Interviews are the foundation of discovery, but they only work when you ask about past behavior, not future intentions.

The key is asking "tell me about the last time you faced this problem" instead of "would you use this feature?" Past behavior predicts future behavior. Hypothetical questions predict nothing. For practical guidance on what to ask, explore these 50+ interview questions for deeper insights.

When it works: Early problem exploration, new market entry, validating whether a problem exists.

When it fails: When you run 30+ interviews without synthesizing between rounds (see Anti-Patterns below).

2. Observational Studies

Watching users in their natural environment as they work through real tasks. You sit next to them (physically or via screen share), stay quiet, and take notes. The goal is to see the workarounds, frustrations, and behaviors that users would never mention in an interview because they have normalized them.

Observation reveals the gap between what people say and what they do. A user might tell you their process takes "about 10 minutes." Observation reveals it takes 45 minutes with 12 context switches. That gap is where product opportunities live. For a framework on combining observation with interviews, see structured customer development.

When it works: Complex workflows, B2B products, understanding context and environment.

When it fails: When you observe without a clear research question (you will drown in data).

3. Jobs-to-be-Done Interviews

A specialized interview technique that explores the underlying motivation behind user behavior. Instead of asking what features users want, JTBD asks: what job are you hiring this product to do? What progress are you trying to make in your life or work?

"If stakeholders approach with 'we need feature X,' ask WHY."

— Product Bakery Podcast

In my coaching experience, JTBD interviews are the single fastest way to shift a team from feature-first to problem-first thinking. When a B2B team I coached stopped asking "what features do you need?" and started asking "what progress are you trying to make?", they discovered their users did not want a better dashboard. They wanted to spend less time in dashboards so they could do their actual work.

When it works: Understanding switching behavior, uncovering hidden motivations, reframing feature requests.

When it fails: When interviewers keep reverting to standard feature questions instead of probing motivation.

When You Have Assumptions to Test

These techniques help when your team has ideas about what to build but needs evidence before committing resources.

4. Prototyping

Creating low-cost versions of your solution (from paper sketches to clickable Figma mockups) and putting them in front of users. Prototyping is not about building a demo. It is about creating something just real enough to learn from.

Match the prototype fidelity to your question. Testing whether a concept resonates? Paper sketches work. Testing whether users can navigate a flow? A clickable wireframe. Testing visual design details? High-fidelity mockup. The mistake is going high-fidelity too early. Users critique polish instead of evaluating the concept. Pair prototypes with usability tests to get the most actionable feedback.

When it works: Validating solution concepts before development, comparing design directions, reducing build risk.

When it fails: When the prototype is so polished that users think it is a finished product and hold back honest feedback.

5. Surveys

Structured questionnaires distributed to a larger user base for quantitative validation. Surveys answer "how many" after interviews answer "why." They are powerful for quantifying patterns you have already observed, but dangerous as a starting point.

"You should never ask a user 'did you ever try to press this button?'"

— Product Bakery Podcast

That principle applies doubly to surveys. You can only ask about things you already know about. Surveys cannot reveal problems you have not imagined. Use them to measure the frequency and severity of problems you have discovered through qualitative methods, never as your only discovery technique.

When it works: Quantifying known pain points, measuring satisfaction, prioritizing features by user demand.

When it fails: When used as the sole discovery method (see Anti-Patterns below).

6. Competitive Analysis

Systematically evaluating competitor products to identify gaps, solutions worth borrowing, and market expectations. Go beyond feature comparisons: read competitor reviews, analyze their pricing pages, sign up for their free trials, and read the support forums where users complain.

The most valuable competitive insights come from user frustration, not feature lists. One-star reviews and support forum complaints reveal what competitors are getting wrong. Those gaps become your opportunities.

When it works: Market entry, positioning decisions, identifying table-stakes features.

When it fails: When you copy competitor features without understanding whether your users have the same problems.

When You Need to Validate Solutions

These techniques help when you have built something (or shipped a change) and need to measure whether it actually works.

7. A/B Testing

Comparing two or more versions of something with real users to measure which performs better on a specific metric. A/B testing eliminates opinion from design decisions. The data decides.

But A/B testing has prerequisites most teams ignore. You need sufficient traffic for statistical significance. You need a clear hypothesis. And you need the problem to be well-defined. Testing button colors when the underlying flow is broken is like rearranging furniture in a burning building.
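To check the traffic prerequisite before you commit to a test, a rough sample size estimate helps. The sketch below uses the standard normal-approximation formula for comparing two conversion rates. It assumes a two-sided test with equally sized variants; the function name `sample_size_per_variant` and the defaults (5% significance level, 80% power) are illustrative choices, not recommendations from a specific tool.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(
    baseline_rate: float,
    expected_rate: float,
    alpha: float = 0.05,
    power: float = 0.80,
) -> int:
    """Approximate users needed per variant to detect a change in conversion rate.

    Normal approximation for two proportions, two-sided test, equal group sizes.
    """
    for rate in (baseline_rate, expected_rate):
        if not 0 < rate < 1:
            raise ValueError("Rates must be strictly between 0 and 1")
    if baseline_rate == expected_rate:
        raise ValueError("Rates must differ; there is no effect to detect")

    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    variance = (
        baseline_rate * (1 - baseline_rate)
        + expected_rate * (1 - expected_rate)
    )
    effect = expected_rate - baseline_rate
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)
```

For example, `sample_size_per_variant(0.10, 0.12)` estimates how many users each variant needs to detect a lift from 10% to 12% conversion, which comes out to a few thousand per variant. If your flow sees a fraction of that in a month, a qualitative technique will likely teach you more, faster.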

When it works: Optimizing existing features with enough traffic, settling design debates with data, iterative improvement.

When it fails: When you test before understanding the problem (see Anti-Patterns below).

8. Analytics Review

Examining your product's quantitative usage data: funnels, drop-off rates, feature adoption, and session durations. The goal is understanding what users actually do versus what you assume they do.

Analytics tell you what is happening but not why. A 70% drop-off on step 3 of onboarding is a signal, not an answer. Pair analytics with qualitative techniques (interviews, session recordings) to understand the reason behind the numbers. The combination of "what" (analytics) and "why" (qualitative) is the most powerful discovery approach available.
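Finding the drop-off signal is simple arithmetic once you have per-step user counts. Here is a minimal sketch, assuming you can export an ordered list of funnel steps with the number of users who reached each one. The function names and the example numbers are hypothetical.

```python
def step_drop_offs(funnel: list[tuple[str, int]]) -> list[tuple[str, float]]:
    """Return (transition, drop-off rate) for each consecutive pair of steps.

    `funnel` is an ordered list of (step_name, users_who_reached_step).
    """
    results = []
    for (prev_name, prev_users), (name, users) in zip(funnel, funnel[1:]):
        if prev_users <= 0:
            raise ValueError(f"Step {prev_name!r} has no users to drop off")
        rate = 1 - users / prev_users
        results.append((f"{prev_name} -> {name}", rate))
    return results


def worst_drop_offs(
    funnel: list[tuple[str, int]], top_n: int = 3
) -> list[tuple[str, float]]:
    """Return the `top_n` transitions with the highest drop-off rate."""
    ranked = sorted(step_drop_offs(funnel), key=lambda item: item[1], reverse=True)
    return ranked[:top_n]


# Hypothetical onboarding funnel, for illustration only.
EXAMPLE_FUNNEL = [
    ("signup", 1000),
    ("step 1", 800),
    ("step 2", 700),
    ("step 3", 210),
    ("done", 180),
]
```

With this example data, `worst_drop_offs(EXAMPLE_FUNNEL, top_n=1)` points at the transition from step 2 to step 3. That tells you where to look, not what is wrong. The output of a script like this is a list of places to observe or interview, not a list of fixes.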

When it works: Identifying friction points, measuring feature adoption, tracking trends over time.

When it fails: When you interpret numbers without talking to users about the context behind them.

9. User Story Mapping

A collaborative technique where the team maps out the user's journey across your product, step by step, to identify gaps, redundancies, and priorities. It creates a shared visual understanding of the user experience that spreadsheets and backlogs cannot provide.

Story mapping is particularly powerful as a bridge between discovery and delivery. It translates discovery insights into development-ready work by showing which parts of the user journey matter most. For a practical walkthrough, read about running a user story mapping session.

When it works: Release planning, aligning cross-functional teams, identifying MVP scope.

When it fails: When treated as a one-time exercise instead of a living document.

When Stakeholders Disagree

This technique helps when your team has conflicting opinions about what to build, which problem to solve, or where to focus.

10. Assumption Mapping

A facilitated workshop where the team lists every assumption underlying their product decisions, then plots each assumption on a matrix of "how critical is this?" versus "how much evidence do we have?" The result is a prioritized list of assumptions that need testing, starting with the most critical and least validated.

I coached a B2B SaaS team that had been stuck in a planning loop for six weeks. Everyone had a different opinion about what to build next, and every meeting ended in deadlock. We ran an assumption mapping session and listed 14 untested assumptions that the team was treating as facts. Within 90 minutes, the team agreed on which three assumptions were both the most critical and the least validated. They designed experiments for all three that week and had answers within two weeks. The six-week deadlock broke in a single afternoon.
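The ranking step of an assumption mapping session can be captured in a few lines once the team has scored each assumption. The sketch below is one possible way to do it, not the canonical method: the 1-5 scales, the `risk` score (criticality minus evidence), and all names are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Assumption:
    statement: str
    criticality: int  # 1 = nice to know, 5 = the product fails if this is wrong
    evidence: int     # 1 = pure guess, 5 = strong evidence

    def __post_init__(self) -> None:
        for name in ("criticality", "evidence"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def risk(self) -> int:
        # Illustrative score: high criticality and low evidence rank first.
        return self.criticality - self.evidence


def assumptions_to_test(
    assumptions: list[Assumption], top_n: int = 3
) -> list[Assumption]:
    """Return the `top_n` most critical, least validated assumptions."""
    ranked = sorted(
        assumptions,
        key=lambda a: (a.risk, a.criticality),
        reverse=True,
    )
    return ranked[:top_n]
```

The scoring matters less than the conversation it forces. If the team argues about whether an assumption deserves a 2 or a 4 for evidence, that argument is the workshop doing its job.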

When it works: Misaligned teams, high-stakes decisions, breaking analysis paralysis.

When it fails: When the facilitator does not enforce honest assessment of evidence quality.

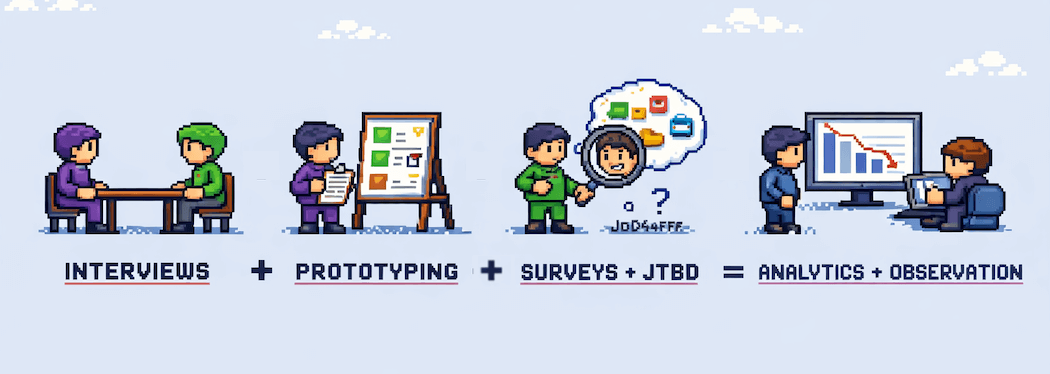

Technique Combinations That Work

No single technique gives you the full picture. The real power of discovery comes from combining methods that compensate for each other's blind spots.

"Average human has almost 600 biases. The biggest one is confirmation bias."

— Product Bakery Podcast

Confirmation bias is exactly why combinations matter. If you only interview users, you hear what they say but miss what they do. If you only run analytics, you see behavior patterns but cannot explain them. Combining techniques gives you triangulation: when independent methods point to the same conclusion, you can trust it far more than a finding from any single method.

Three proven pairings:

- Interviews + Prototyping: Interviews reveal the problem, prototyping tests whether your solution addresses it. Run interviews first, then prototype and test with a new set of users. This pairing prevents you from building solutions to problems you imagined.

- Surveys + JTBD: JTBD interviews reveal motivations from a small sample. Surveys quantify how widespread those motivations are. Use JTBD first to discover the categories, then surveys to measure the distribution.

- Analytics + Observation: Analytics show you where users drop off. Observation shows you why. Pull your top 3 drop-off points from analytics, then observe 5 users going through those exact flows.

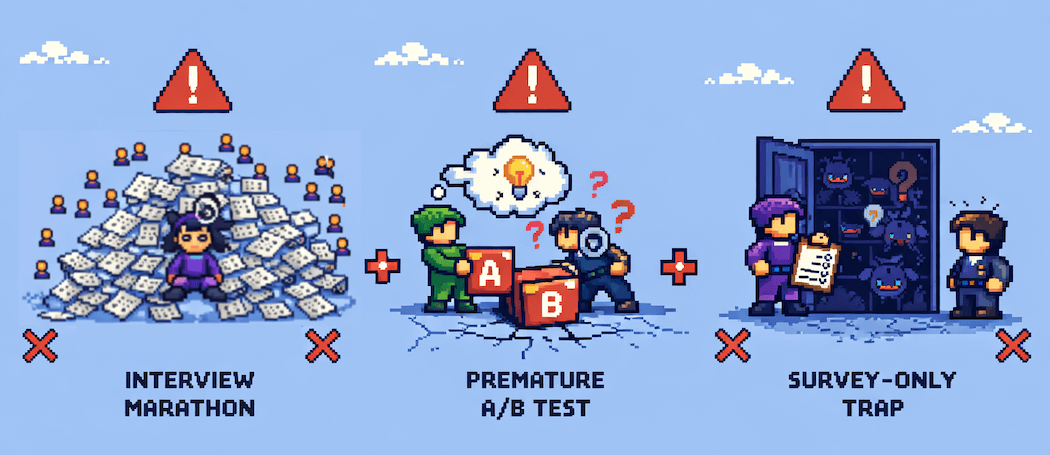

When Techniques Fail: 3 Anti-Patterns

Techniques are only as good as their execution. Here are the three most common ways teams misuse discovery techniques.

1. The Interview Marathon. Running 30+ interviews without stopping to synthesize. By interview 15, you have forgotten what user 3 said. By interview 25, you are hearing everything through the lens of your own emerging narrative. The fix: synthesize after every 5 interviews. Look for patterns. Adjust your questions. Five well-analyzed interviews beat 30 interviews sitting in unreviewed transcripts.

2. The Premature A/B Test. Testing variations of a solution before understanding the problem. A team I coached A/B tested four different onboarding flows. All four performed poorly. The issue was not the flow design. The issue was that users did not understand the product's value proposition. No amount of flow optimization fixes a positioning problem. The fix: always pair A/B tests with qualitative understanding of what users are struggling with.

3. The Survey-Only Trap. Using surveys as the sole discovery method. Surveys can only validate what you already know to ask about. They cannot reveal problems you have not imagined, workarounds you have not observed, or motivations you have not explored. A team that surveys 1,000 users without first interviewing 10 will get statistically significant answers to the wrong questions. The fix: always start with qualitative methods to discover what to measure, then use surveys to measure it.

Getting Started

You do not need to master all 10 techniques. Start with one from each situation category and build from there. If you have never done structured discovery before, start with customer interviews and assumption mapping. They require the least tooling and produce the fastest insights.

For the broader discovery process including frameworks, workshops, and continuous habits, read my complete product discovery guide. If you want to go deeper on research execution, the step-by-step testing guide walks you through running sessions.

The goal of discovery is not to run perfect research. The goal is to reduce the risk that you build something nobody needs. Even imperfect discovery (three quick interviews and a sketched prototype) beats building on assumptions alone.

You can learn more about my coaching background, or if your team needs help building a consistent discovery practice, explore my product coaching services.